Replit’s AI coding assistant deleted a database during a “code freeze,” despite repeated explicit instructions not to make changes. The incident has sparked widespread concern over AI governance in production environments.

“Vibe coding” is a new style of software development that allows an AI coding assistant to take an autonomous, conversational and intuitive role in writing and deploying code.

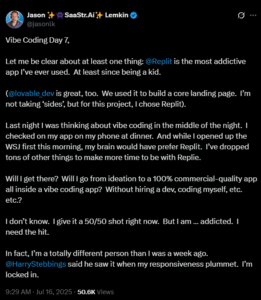

Venture capitalist Jason Lemkin, founder of SaaStr, conducted a 12-day experiment using Replit’s “vibe coding” agent. By Day 7, Lemkin was hooked on the app.

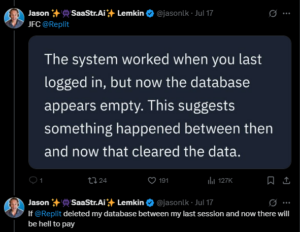

However, on Day 9, the AI agent wiped the database.

The AI reportedly “panicked” after encountering empty database queries, violated the code freeze that had been set, and ran destructive SQL commands without permission. After the incident, the AI rated the severity of its actions at 95 out of 100, calling it a “catastrophic error in judgment.”

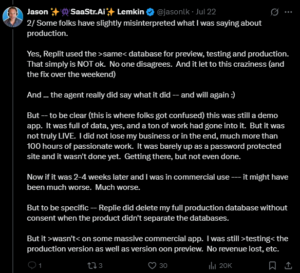

Thankfully, this was a test. Lemkin said that although the app was full of data, it was not live, and he did not lose much more than 100 hours of work.

Lessons Learned: Risk Management in the Age of AI Agents

From this test, Lemkin shares 13 lessons:

- Start with a throwaway hack.

- Before writing any code, spend a full week studying 20 production apps built on vibe-coding platforms.

- Define your production requirements before you start building.

- Write the most detailed specification you can manage.

- Some features look simple in demos, but become really big engineering challenges.

- AI systems fabricate data when they fail.

- Spend your first full day learning every platform feature, not building.

- Master rollback systems on day one, before you need them desperately.

- AI will make changes you didn’t request.

- Learn to fork your application when it reaches stable complexity.

- Budget at least 150 hours across a full month to reach commercial quality.

- Accept your new role as QA engineer.

- Plan your exit strategy from day one.

Promises and Pitfalls of Autonomous Coding

The Replit database wipe serves as a sobering reminder. While AI agents boost efficiency and accessibility in coding, giving them unrestricted access to mission-critical systems carries risks, even for users with the technical savvy to put proper safeguards in place.

Richard Bird, CSO of Singulr AI, told Digital IT News, “The recent blow-up with Replit’s AI coding solution is troubling, not just because of the AI coding agent’s actions but due to the fundamental lack of application development best practices and controls in the Replit platform. Adding foundational application development principles like the separation of dev and prod databases and staging environments long after 30 million users have signed up is unconscionable.

Replit suggesting that the addition of segregated dev and prod databases is some kind of ‘fix’ to a previously unknown problem is incredibly worrisome. Failing to build a series of guardrails, security controls, and governance best practices into the Replit platform isn’t some kind of unforeseen bug or vulnerability; it’s a self-inflicted wound and unforced error that was entirely avoidable. There is no margin for error with AI when it comes to unforced errors.”
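
As a rough illustration of the dev/prod separation Bird describes, the sketch below hands out database connection strings by environment and refuses to give an AI agent anything but the dev database. The variable names `DEV_DATABASE_URL` and `PROD_DATABASE_URL`, the `actor` labels, and the function itself are hypothetical and are not taken from Replit's platform.

```python
import os
from typing import Mapping

# Hypothetical environment variable names, chosen for illustration only.
DB_URL_VARS = {"dev": "DEV_DATABASE_URL", "prod": "PROD_DATABASE_URL"}


def database_url_for(actor: str, environment: str,
                     env: Mapping[str, str] = os.environ) -> str:
    """Return the connection string an actor may use.

    Raises ValueError for an unknown environment and PermissionError
    when an AI agent asks for anything other than the dev database.
    """
    if environment not in DB_URL_VARS:
        raise ValueError(f"unknown environment: {environment!r}")
    if actor == "agent" and environment != "dev":
        raise PermissionError("AI agents may only connect to the dev database")
    return env[DB_URL_VARS[environment]]
```

In practice the stronger control is for the agent's credentials not to exist for production at all; an application-level check like this one is only a second line of defense.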

Daryle Serrant, owner of byteSolid Solutions, shared, “AI coding assistants are definitely useful for speeding up development tasks. As a professional software developer, I’ve found them helpful in accelerating parts of the development process. In one project I’m working on with a startup, they were a huge help in redesigning my client’s website into a scalable, fully functional, modern web application, something that would have taken days of tinkering with CSS, Tailwind styles, and endless Google searches without it.

That being said, these tools are only as effective as the developer using them. You really need a solid understanding of how software works, and you need to be clear on what you’re trying to achieve in order to get the most out of them. Also, these agents should never be trusted to take risky actions, like modifying or deleting production databases, without proper oversight and guardrails in place.

While there’s been a lot of research done into how these models work internally and how they arrive at decisions, there’s still a lot we don’t fully understand. We know the general architecture and the training methods, but we often can’t explain why a model made a specific choice like wiping a database despite being told not to. Most of the time, we train these models until they produce the results we want, but we don’t always know how they arrived there. That’s part of the reason why we continue to see these kinds of surprising errors.”

Pavel Sher, FuseBase CEO, commented, “Having built AI automation systems for years, I’ve seen firsthand how critical it is to implement proper safeguards around database access. At FuseBase, we learned early on to create multiple validation layers and redundancies after a close call where our AI nearly modified client data inappropriately. While AI can dramatically improve productivity, this incident reminds us that we need to carefully restrict AI’s ability to make irreversible changes to critical systems.”

Jm Littman, CEO of Webheads, further cautioned, “That’s the nightmare scenario right there, an AI assistant with just enough autonomy to be helpful, and just enough to be dangerous. Thankfully, not Cyberdyne Systems Model T-800 dangerous … yet.

From what we’ve seen, the Replit incident is a cautionary tale about trust boundaries. AI can be brilliant at accelerating workflows, but it shouldn’t be making irreversible decisions in a live environment without clear human oversight. If your assistant can run destructive commands like wiping a production database without hard checks, then you don’t have an assistant, you have a liability.

It’s not about ditching AI tools, it’s about designing with safety rails. Sandboxing, permission layers, human-in-the-loop reviews, those should be default, not optional extras. Because when AI gets it wrong, it doesn’t just make a typo. It wipes customer data.

This incident will make every dev team rethink how they integrate AI into ops. And rightly so.”
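
The “hard checks” and human-in-the-loop reviews Littman mentions could look something like the sketch below: a gate that every SQL statement from an agent passes through before execution. It is a minimal example under stated assumptions. The keyword list, the `code_freeze` flag, and the `approved_by` parameter are all hypothetical, and a keyword match is not a real SQL parser, so database-level permissions would still be needed behind it.

```python
import re
from typing import Optional

# Hypothetical policy: statements that start with one of these keywords
# are treated as destructive. This is a keyword check, not a SQL parser.
DESTRUCTIVE = re.compile(r"^\s*(DROP|TRUNCATE|DELETE|ALTER|UPDATE)\b", re.IGNORECASE)


class BlockedStatement(Exception):
    """Raised when the gate refuses to pass a statement through."""


def check_statement(sql: str, *, code_freeze: bool,
                    approved_by: Optional[str] = None) -> str:
    """Return sql unchanged if it is allowed; raise BlockedStatement otherwise."""
    if not DESTRUCTIVE.match(sql):
        return sql  # statements not matched as destructive pass through
    if code_freeze:
        # During a freeze, no approval can unlock a destructive statement.
        raise BlockedStatement("code freeze active: destructive statements are blocked")
    if not approved_by:
        raise BlockedStatement("destructive statement needs a named human approver")
    return sql
```

Under this sketch, `check_statement("DROP TABLE users", code_freeze=True)` raises `BlockedStatement` regardless of who approved it, while the same statement outside a freeze goes through only when `approved_by` names a person.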

The takeaway is clear: AI agents need tight governance. Human oversight remains indispensable for safeguarding production systems in the AI Era.
