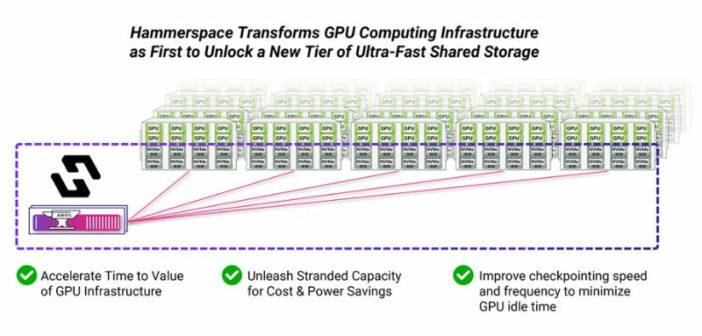

Hammerspace has launched the latest version of its Global Data Platform software, introducing a new Tier 0 shared storage solution that transforms local NVMe storage on GPU servers into ultra-fast, persistent shared storage. By integrating previously isolated NVMe storage into the Hammerspace Global Data Platform, Tier 0 delivers data to GPUs at local NVMe speeds, unlocking new levels of performance and efficiency in GPU computing and storage utilization.

With Tier 0, organizations can now fully leverage the local NVMe on GPU servers as a high-performance shared resource with the same redundancy and protection as traditional external storage. This integration makes the server local NVMe capacity part of a unified parallel global file system, seamlessly spanning all storage types with automated data orchestration for greater efficiency and flexibility.

By using this otherwise idle high-performance storage, Tier 0 reduces the amount of expensive external storage organizations need to buy, cuts power requirements and reclaims valuable data center space. It builds on the sunk investment in NVMe capacity already deployed within the GPU servers, maximizing utilization and accelerating time to value.

“Tier 0 represents a monumental leap in GPU computing, empowering organizations to harness the full potential of their existing infrastructure. By unlocking stranded NVMe storage, we are not just enhancing performance—we’re redefining the possibilities of data orchestration in high-performance computing,” said David Flynn, Founder and CEO of Hammerspace. “This technology allows innovators to push the boundaries of AI and HPC, accelerating time to value and enabling breakthroughs that can shape the future.”

Accelerate Time to Value of GPU Infrastructure

By using spare capacity on their GPU servers with Tier 0, organizations can get workflows running in production right away, even before external shared storage systems are configured. Because the NVMe storage is already present in the deployed GPU servers, setup time drops sharply and teams can start their GPU computing projects almost immediately. This allows rapid experimentation and deployment, so data scientists and engineers can focus on innovation rather than infrastructure.

Unleash Stranded Capacity for Cost and Power Savings

As organizations expand GPU environments for AI training, HPC, and unstructured data processing, the local NVMe storage in GPU servers typically goes largely unused by compute workflows because it is siloed and lacks shared access and built-in reliability features.

With Tier 0, Hammerspace turns isolated NVMe into a high-performance shared resource, reducing power demands for that storage capacity by approximately 95% compared with deploying external networking and storage. Accessible via a global, multi-protocol namespace, it spans silos, sites, and clouds, enabling seamless data access for users and applications. This integration keeps active datasets local to GPU servers, allows AI model checkpoints to be written locally, and automates tiering across Tier 0, Tier 1 and Tier 2 storage.

Tier 0 enables the storage capacity in GPU servers to be incorporated into the Hammerspace Global Data Platform in minutes, making this storage capacity available almost instantly. Through Hammerspace Data Orchestration, data protection, tiering, and global data services are fully automated across on-premises and cloud storage.

By seamlessly integrating local NVMe as part of a unified global file system, Hammerspace makes files and objects stored on GPU servers available to other clients and orchestrated intelligently across all storage tiers. And, as with all Hammerspace innovations, no proprietary software or modifications are required on the client or GPU server—everything operates within the Linux kernel using standard protocols.

Maximize GPU Efficiency with Lightning-Fast Checkpointing

Hammerspace Tier 0 rewrites the rules for storage speed, unlocking a new era of efficiency in GPU computing. By streamlining data handling and making checkpointing up to 10x faster than with external storage, Tier 0 eliminates the lag that has been holding back GPU utilization. Every second shaved off checkpointing frees GPU resources for other work, translating to real cost savings and up to 20% more output from existing investments. In large GPU clusters, this adds up to millions in recaptured value, an essential breakthrough for any organization serious about harnessing the power of high-performance computing.
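To see how checkpoint speed relates to GPU output, the short Python sketch below works through the arithmetic under a deliberately simple model. It assumes checkpoints are written synchronously, so GPUs sit idle while each checkpoint is saved. The function names and the sample figures (600 seconds of compute between checkpoints, 120 seconds per checkpoint) are hypothetical values chosen for illustration, not Hammerspace measurements or the method behind the figures quoted above.

```python
def checkpoint_overhead(compute_s: float, checkpoint_s: float) -> float:
    """Fraction of wall-clock time GPUs spend stalled on checkpoint writes.

    Assumes synchronous checkpointing: no training progress is made
    while a checkpoint is being written.
    """
    return checkpoint_s / (compute_s + checkpoint_s)


def output_gain(compute_s: float, checkpoint_s: float, speedup: float) -> float:
    """Relative increase in useful work per unit of wall-clock time
    when checkpoint writes become `speedup` times faster."""
    cycle_before = compute_s + checkpoint_s
    cycle_after = compute_s + checkpoint_s / speedup
    return cycle_before / cycle_after - 1.0


if __name__ == "__main__":
    # Hypothetical workload: 600 s of training between checkpoints,
    # 120 s to write each checkpoint to external storage.
    compute_s, checkpoint_s = 600.0, 120.0

    stalled = checkpoint_overhead(compute_s, checkpoint_s)
    gain = output_gain(compute_s, checkpoint_s, speedup=10.0)

    print(f"Time stalled on checkpoints: {stalled:.1%}")
    print(f"Extra output with 10x faster checkpoints: {gain:.1%}")
```

In this model the gain depends entirely on how much of each training cycle is spent checkpointing: workloads that checkpoint rarely or asynchronously would see a smaller benefit, while those that checkpoint often would see a larger one.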

Unveiling Hammerspace Global Data Platform Software v5.1

The latest version of Hammerspace software also provides a number of additional new features and capabilities that improve performance, connectivity and ease of use, including:

- Native client-side S3 protocol support: provides unified permissions and a unified namespace alongside the NFS and SMB file protocols, with extreme scalability.

- Significant platform performance improvements: 2x for metadata and over 5x for data mobility, data-in-place assimilation and cloud-bursting.

- Additional resiliency features with three-way HA for Hammerspace metadata servers.

- Automated failover improvements across multiple clusters and sites.

- New Objectives (policy-based automation) & refinements to existing Objectives.

- Significant updates to the Hammerspace GUI, including new tile-based dashboards that may be customized per user, with richer controls.

- Enhancements to client-side SMB file protocol.

- High availability when deployed in Google Cloud Platform.

The advancements in Hammerspace’s Global Data Platform are more than just a revolution in data storage; they are a catalyst for innovation in GPU computing. By transforming how organizations leverage their infrastructure, we’re paving the way for unprecedented advancements in AI and high-performance computing. As we continue to orchestrate the next data cycle, Hammerspace is poised to lead the charge into a new era of efficiency and performance.

Supporting Quotes

“The demand for compute power is constantly growing as use cases for HPC rapidly expand. Yet, budget and power remain constraints. Hammerspace’s Tier 0 unlocks a new level of efficiency, allowing us to reallocate resources already within our compute rather than investing in additional storage infrastructure,” said Chris Sullivan, Director of Research and Academic Computing at Oregon State University. “This approach not only accelerates research by maximizing existing infrastructure but also aligns with our sustainability goals by reducing energy consumption and data center footprint.”

“The advantages of Hammerspace’s new Tier-0 storage technology are quite clear,” said Russ Fellows, VP of Futurum Group Labs. “This will enable companies to leverage their local storage to accelerate AI workloads while providing the redundancy, availability and scale that Hammerspace is known for. We believe this technology will be rapidly adopted based upon its open-source foundations and the unmet need organizations have for high-performance data storage for AI workloads.”

“Hammerspace’s new Tier 0 technology is an ingenious leap forward for GPU environments. By transforming local NVMe into high-performance shared storage, Tier 0 solves substantial storage performance, networking, power, cooling, and cost problems for IT organizations trying to get their arms around GPU-based HPC, GenAI and LLMs. Its capabilities empower them to redirect resources to where they matter most — fueling more GPUs,” said Marc Staimer, CEO of Dragon Slayer Consulting. “This approach not only reduces infrastructure costs and power consumption but, more importantly, accelerates time to insights, making it an essential innovation for the future of AI and HPC.”

“Integrating Hammerspace’s Tier 0 storage into GPU orchestration environments brings a transformative leap in performance and efficiency for our customers,” said Matthew Shaxted, Founder and CEO of Parallel Works. “By unlocking local NVMe storage within GPU servers as a high-speed, shared resource, Tier 0 maximizes the compute potential of every GPU cycle. This innovation enables our customers to run demanding workloads, like AI training and HPC, faster and more efficiently than ever, with streamlined data access across environments. Together, Hammerspace and Parallel Works are empowering organizations to accelerate their AI and data-driven initiatives without the bottlenecks of traditional decentralized infrastructure.”

“We have many customers that are deploying large GPU clusters, and by making the local GPU server storage available as a new tier of high-performance shared storage, Hammerspace is adding a huge value to these customers,” said Scot Colmer, Field CTO, AI at Computacenter. “The ROI of the Hammerspace Tier 0 solution is really compelling, delivering massive cost savings and power savings to our customers and decreasing GPU idle time.”

Learn more about the new tier of ultra-fast shared storage in Hammerspace’s blog.
